
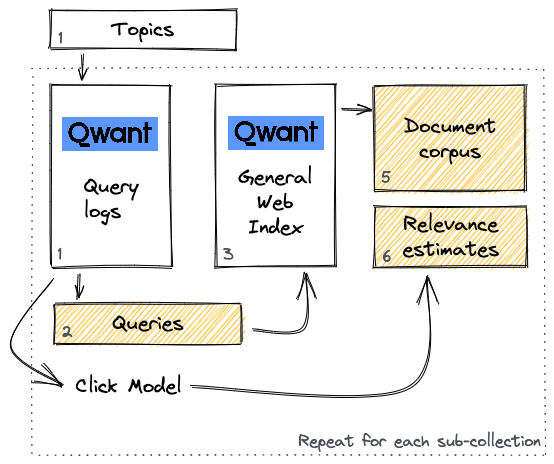

The data for this task is a sequence of web document collections and queries provided by Qwant.

Collection Download: The training and test collections are available on the TU Wien Research Data Repository. The full collection is provided and processed so that queries and documents are mapped to unique IDs. This mapping differs from previous LongEval editions; we therefore recommend not using the collections released for LongEval 2023 and 2024 for training.

The full collection is provided with minimal sampling in order to give participants full freedom and to offer the IR community a comprehensive collection for testing future methods.

Description of the Data

Queries:

The queries are extracted from Qwant’s search logs, based on a set of selected topics. The query set was created in French.

Documents:

The document collection includes all documents that were displayed in the SERPs for the selected queries. Filters were applied to exclude adult content. An SQLite file, collection.db, is provided to participants; it maps the URLs of the entire collection to document IDs, so that every document has a unique ID across the whole collection. The table `mapping` has the following structure:

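| Column index | Name | Type | Not null | Default | Primary key |
|---|---|---|---|---|---|
| 0 | id | INTEGER | 0 | None | 1 |
| 1 | url | TEXT | 0 | None | 0 |
| 2 | last_updated_at | TEXT | 0 | None | 0 |
| 3 | date | TEXT | 0 | None | 0 |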
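The schema above follows the row format of SQLite's `PRAGMA table_info` (cid, name, type, notnull, dflt_value, pk). To check a local copy of collection.db, you can run that pragma with Python's built-in `sqlite3` module. The sketch below recreates the table in memory from an assumed `CREATE TABLE` statement (our guess, not the organizers' actual definition) and prints the same rows; `describe_table` is a hypothetical helper name.

```python
import sqlite3

# Assumption: a plausible declaration of `mapping` that yields the schema rows
# listed on this page; the real collection.db may have been created differently.
ASSUMED_DDL = """
CREATE TABLE mapping (
    id INTEGER PRIMARY KEY,
    url TEXT,
    last_updated_at TEXT,
    date TEXT
)
"""


def describe_table(conn: sqlite3.Connection, table: str) -> list[tuple]:
    """Return PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)."""
    # PRAGMA arguments cannot be bound as SQL parameters; pass trusted names only.
    return conn.execute(f"PRAGMA table_info({table})").fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # use sqlite3.connect("collection.db") for real data
    conn.execute(ASSUMED_DDL)
    for row in describe_table(conn, "mapping"):
        print(row)
```

Run against the downloaded collection.db (without executing `ASSUMED_DDL`), the printed rows should match the schema table if your copy is intact.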

An example record (ID 1):

    {
      "id": 1,
      "url": "https://www.blogduvoyage.fr/roadtrip-usa-conseils/",
      "last_updated_at": [1640160479, 1640160479],
      "date": ["2022-06", "2022-06"]
    }

Participants can use this table to look up details about each document, such as its URL and when it was last updated.

Relevance estimates:

The relevance estimates for LongEval-Retrieval are obtained by automatically collecting implicit user feedback. This implicit feedback is derived with a click model based on Dynamic Bayesian Networks (DBN) trained on Qwant data. The click model outputs an attractiveness probability, which is converted into a score on a 3-level scale (0 = not relevant, 1 = relevant, 2 = highly relevant). These relevance estimates will be complemented with explicit relevance assessments after the submission deadline.
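The exact click model and the probability thresholds used for the 3-level scale are not given on this page. Purely as an illustration of the idea, the sketch below uses the *simplified* DBN estimator (continuation probability fixed at 1), not Qwant's trained model: a result counts as examined if it is ranked at or above the last click in a session, and its attractiveness is the fraction of those examinations that received a click. The `to_grade` thresholds (0.3 and 0.6) and all function names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable

# One search session: (query, ranked doc IDs shown, set of clicked doc IDs).
Session = tuple[str, list[int], set[int]]


def sdbn_attractiveness(sessions: Iterable[Session]) -> dict[tuple[str, int], float]:
    """Simplified DBN: results at or above the last click count as examined;
    attractiveness = clicks / examinations for each (query, doc) pair."""
    clicks: dict[tuple[str, int], int] = defaultdict(int)
    examined: dict[tuple[str, int], int] = defaultdict(int)
    for query, ranking, clicked in sessions:
        click_ranks = [i for i, doc in enumerate(ranking) if doc in clicked]
        if not click_ranks:
            continue  # no click means no examination evidence under this rule
        last_click = max(click_ranks)
        for doc in ranking[: last_click + 1]:
            examined[(query, doc)] += 1
            if doc in clicked:
                clicks[(query, doc)] += 1
    return {key: clicks.get(key, 0) / n for key, n in examined.items()}


def to_grade(p: float, t_relevant: float = 0.3, t_highly: float = 0.6) -> int:
    """Map an attractiveness probability to 0/1/2 (hypothetical thresholds)."""
    if p >= t_highly:
        return 2
    if p >= t_relevant:
        return 1
    return 0


if __name__ == "__main__":
    sessions = [
        ("q1", [10, 20, 30], {20}),
        ("q1", [10, 20, 30], {10, 20}),
        ("q1", [10, 20, 30], set()),
    ]
    for (query, doc), p in sdbn_attractiveness(sessions).items():
        print(query, doc, round(p, 2), to_grade(p))
```

With these three sessions, document 10 is examined twice and clicked once (attractiveness 0.5, grade 1), document 20 is clicked in both examinations (1.0, grade 2), and document 30 is never ranked at or above a last click, so it receives no estimate.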

An overview of the data creation process is shown in the figure below:

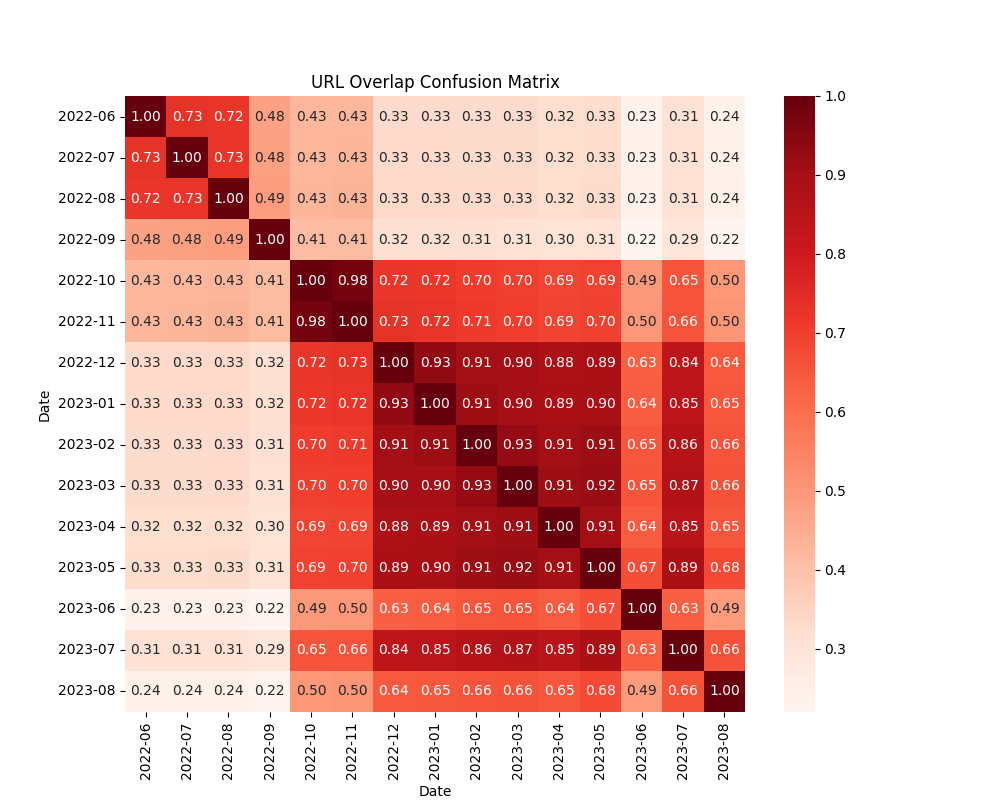

The figure below shows the overlap of documents across the full collection:

More details about the collection can be found in the following paper: P. Galuscakova, R. Deveaud, G. Gonzalez-Saez, P. Mulhem, L. Goeuriot, F. Piroi, M. Popel: LongEval-Retrieval: French-English Dynamic Test Collection for Continuous Web Search Evaluation.

The SciRetrieval collection consists of queries and documents extracted from the CORE scholarly literature search engine. Its queries were issued by CORE users, and a dedicated pipeline was designed to capture user queries, the corresponding search results, and user interactions.

Note: Please ensure you are using an up-to-date version of curl. Older versions may cause download errors due to compatibility issues with the server.

You can use curl or wget to download any of the collections, always using the full URL exactly as provided, for example:

Web Retrieval:

wget https://researchdata.tuwien.ac.at/records/th5h0-g5f51/files/Longeval_2025_Train_Collection_p1.zip\?download\=1\&preview\=1\&token\=eyJhbGciOiJIUzUxMiJ9.eyJpZCI6IjcwM2Y4MzQ0LTFlMDEtNDYxNy1iNDc4LTI5MmQ5MzYwNTU3NyIsImRhdGEiOnt9LCJyYW5kb20iOiI4NjYxMWFkODQzNDk2ZDk0NzllMDNlOWIyYWM1Zjc4NCJ9.YhnRV6WzWfQiuLQcGyTrA3gyI_5UBe9rtUAV6qKk5U7tqGEmD4NUdyfjGo2-U7tnBIlD7iTwUUDi0nw3GcXPmA

wget https://researchdata.tuwien.ac.at/records/th5h0-g5f51/files/Longeval_2025_Train_Collection_p2.zip\?download\=1\&preview\=1\&token\=eyJhbGciOiJIUzUxMiJ9.eyJpZCI6IjcwM2Y4MzQ0LTFlMDEtNDYxNy1iNDc4LTI5MmQ5MzYwNTU3NyIsImRhdGEiOnt9LCJyYW5kb20iOiI4NjYxMWFkODQzNDk2ZDk0NzllMDNlOWIyYWM1Zjc4NCJ9.YhnRV6WzWfQiuLQcGyTrA3gyI_5UBe9rtUAV6qKk5U7tqGEmD4NUdyfjGo2-U7tnBIlD7iTwUUDi0nw3GcXPmA

SciRetrieval:

wget https://researchdata.tuwien.ac.at/records/r643n-yc044/files/longeval_sci_training_2025_abstract.zip\?download\=1\&preview\=1\&token\=eyJhbGciOiJIUzUxMiJ9.eyJpZCI6ImYwNWI4NmQ5LTJhNTYtNGJlYy04OWE0LWE4OGU3OTA2ZTkwOSIsImRhdGEiOnt9LCJyYW5kb20iOiJhYWY1Yjg3MzE0NjQ1NDg1NjI3MzA0YzA3ZDQ0ZDAyOCJ9.ggBiT3HU9nhNcPwgM7NjAswFTwNdXSENnM25v6c0DMPwZ2cSeLjmJQH9ImUz6iW-8urif3r-TrygK-z5ZnNFiw

wget https://researchdata.tuwien.ac.at/records/r643n-yc044/files/longeval_sci_training_2025_fulltext.zip\?download\=1\&preview\=1\&token\=eyJhbGciOiJIUzUxMiJ9.eyJpZCI6ImYwNWI4NmQ5LTJhNTYtNGJlYy04OWE0LWE4OGU3OTA2ZTkwOSIsImRhdGEiOnt9LCJyYW5kb20iOiJhYWY1Yjg3MzE0NjQ1NDg1NjI3MzA0YzA3ZDQ0ZDAyOCJ9.ggBiT3HU9nhNcPwgM7NjAswFTwNdXSENnM25v6c0DMPwZ2cSeLjmJQH9ImUz6iW-8urif3r-TrygK-z5ZnNFiw
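The backslashes in the commands above only escape `?`, `=` and `&` for the shell; they are not part of the URL. By default wget names the saved file after the last part of the URL, query string included, so `wget -O Longeval_2025_Train_Collection_p1.zip '<full URL>'` gives a cleaner file name. If you prefer to script the download, the Python sketch below (standard library only, not tested against the repository) derives the file name from the URL path and streams the response to disk; `local_name` and `download` are hypothetical helper names.

```python
import posixpath
import shutil
import urllib.request
from pathlib import Path
from urllib.parse import urlsplit


def local_name(url: str) -> str:
    """File name from the URL path only, dropping ?download=...&token=..."""
    return posixpath.basename(urlsplit(url).path)


def download(url: str, dest_dir: str = ".") -> Path:
    """Stream `url` to dest_dir/<local_name(url)>; pass the unescaped URL."""
    target = Path(dest_dir) / local_name(url)
    with urllib.request.urlopen(url) as resp, open(target, "wb") as out:
        shutil.copyfileobj(resp, out)  # copies in chunks; the archives are large
    return target
```

To use it, call `download(url)` with the full address from one of the commands above, after replacing `\?`, `\=` and `\&` with `?`, `=` and `&`.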